
I am a Research Scientist at Adobe Research. My research interests lie in the fundamental performance of visual generation models and in enhancing AI creativity. Recently, I have been exploring Interactive Video Generation and World Models. I completed my Ph.D. at CUHK, advised by Prof. Jiaya Jia, where I worked on visual generation and multimodal LLMs. Before that, I was a research assistant at BAIR, UC Berkeley, where I worked on early Test-Time Training research (Tent). 💡 We are hiring self-motivated and creative interns. If you are interested in an Adobe internship or a university collaboration, please feel free to contact me.


Rolling Sink effectively scales autoregressive video synthesis to ultra-long durations (5-30 minutes) at test time, with consistent subjects, stable colors, and smooth motion.

EditVerse unifies a diverse range of generation and editing tasks for both images and videos within a single, powerful model.

We show that, with a carefully designed generative video propagation framework, a variety of video tasks can be addressed in a unified way by leveraging the generative power of video generation models.

Jenga accelerates HunyuanVideo by 4.68-10.35x through dynamic attention carving and progressive resolution generation.

Adding a 'Lego' attribute to the child produces an edited video. The method is powered by a novel video inversion process and cross-attention control. We also find that a Decoupled-Guidance strategy is essential for video editing.

Rethinking the inversion process: Boosting Diffusion-based Editing with 3 Lines of Code.

PS-VAE introduces a semantic-pixel reconstruction objective to regularize the latent space, enabling both semantic information and fine-grained details to be compressed into a compact representation for state-of-the-art text-to-image (T2I) generation and editing.

HBridge introduces an asymmetric H-shaped architecture that bridges heterogeneous experts through mid-layer semantic connections, achieving superior unified multimodal understanding and generation with lower training cost.

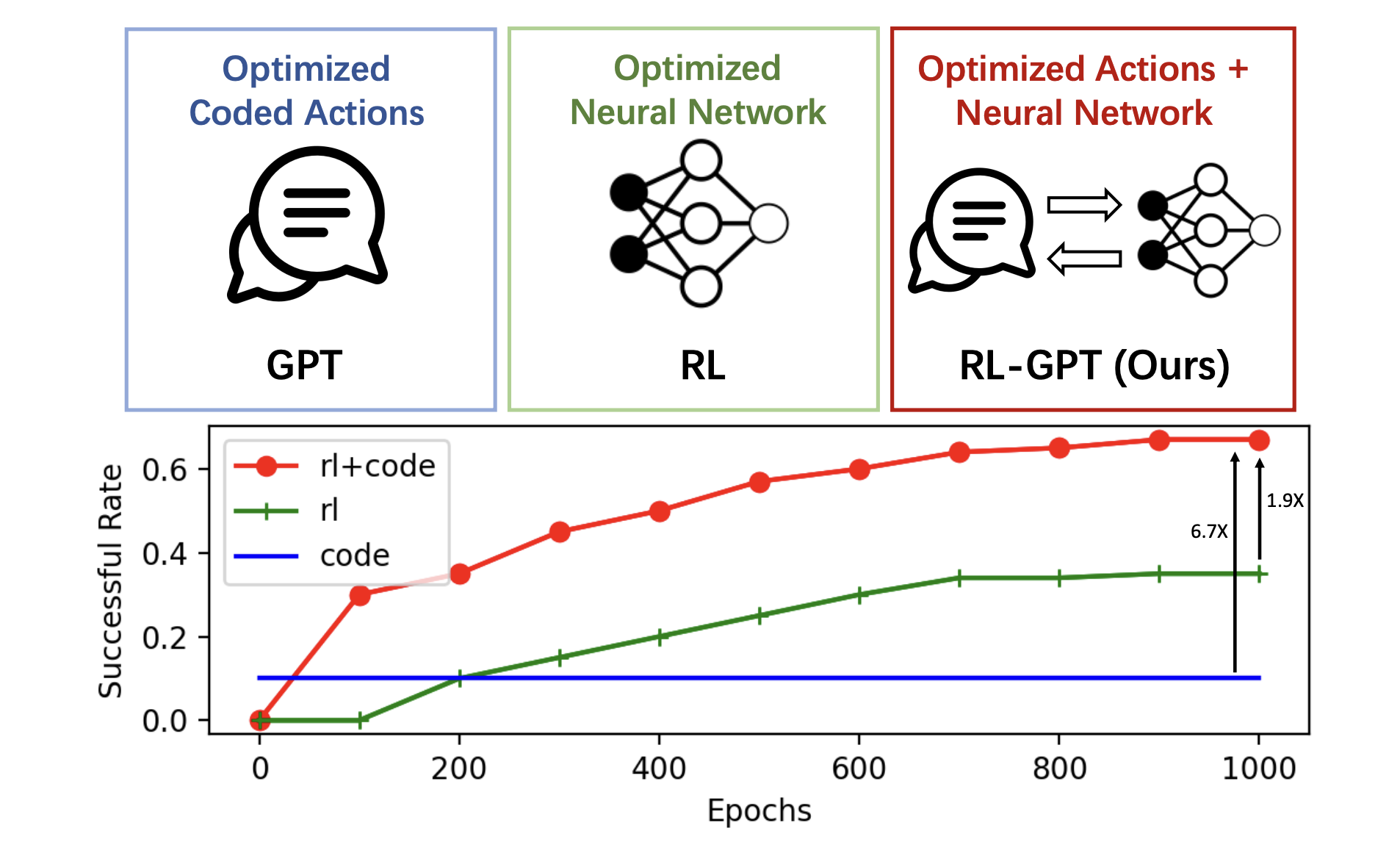

The slow agent decomposes the task and determines "which actions" to learn. The fast agent writes code and RL configurations for low-level execution.

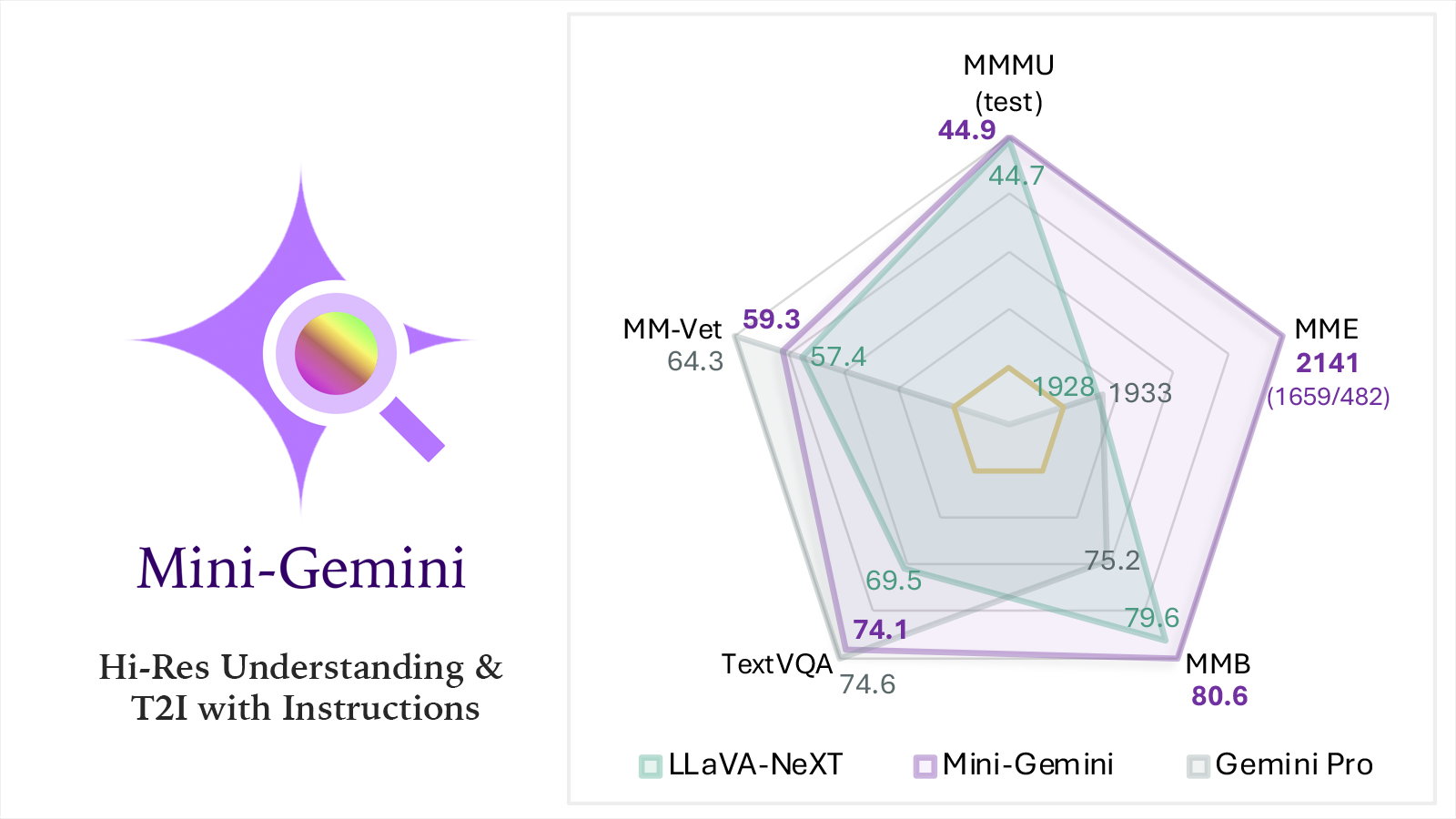

Mining the potential of open-source VLMs! Mini-Gemini is a novel framework that supports VLMs ranging from 2B to 34B parameters for high-resolution image understanding. It has impressive OCR capability and can generate high-quality images powered by its multimodal reasoning ability.

Project Frame Forward applies changes across entire videos based on one annotated frame and a simple text prompt, bringing the precision of photo editing to video.

Adobe Firefly Image-to-Video turns static images into animated video clips with AI-powered motion, depth, and cinematic flair.

Adobe MAX 2025 Sneaks, Adobe, 2025

Doctoral Consortium, ICCV, 2025

Most Influential CVPR Papers, Paper Digest, 2024

Excellent Teaching Assistantship, CUHK, 2023

Hong Kong PhD Fellowship Scheme (HKPFS), 2021

Vice-Chancellor's Scholarship, CUHK, 2021

Scientist Scholarship of China (top 1%), 2019

Top 10 Undergraduate of XJTU (top 0.1%), 2019

National Scholarship of China, 2018, 2019


| Course | Title | Term |
| --- | --- | --- |
| ENGG5104 | Image Processing and Computer Vision | 2023 Spring |
| ENGG2780A | Probability for Engineers | 2022 Spring |
| CSCI1540 | Computer Principles and C++ Programming | 2021 Fall |

